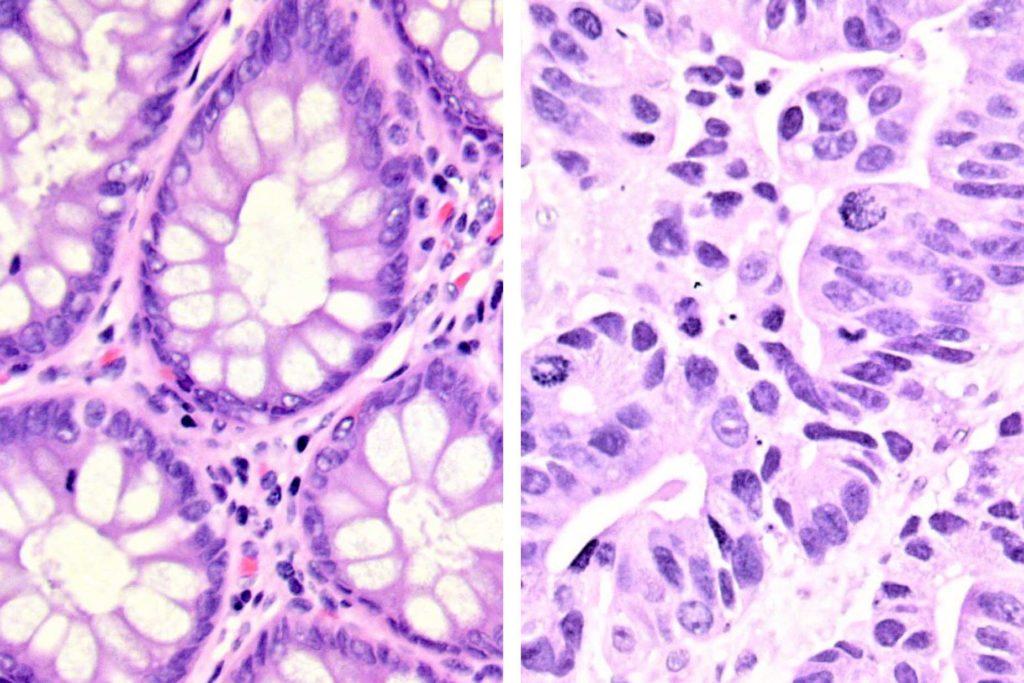

Inside a laboratory at the James A. Haley Veterans' Hospital in Tampa, Florida, machines are rapidly processing tubes of patients' body fluids and tissue samples. Pathologists examine those samples under microscopes to spot signs of cancer and other diseases.

But distinguishing certain features of a cancer cell can be difficult, so Drs. Stephen Mastorides and Andrew Borkowski decided to get a computer involved.

In a series of experiments, they uploaded hundreds of images of slides containing lung and colon tissues into artificial intelligence software. Some of the tissues were healthy, while others had different types of cancer, including squamous cell and adenocarcinoma.

Then they tested the software with more images the computer had never seen before.

"The module was able to put it together, and it was able to differentiate, 'Is it a cancer or is it not a cancer?'" Borkowski said. "And not only that, but it was also able to say what kind of cancer is it."

The doctors were harnessing the power of what's known as machine learning. Software that has been pre-trained on millions of images of everyday subjects, such as dogs and trees, can learn to distinguish new kinds of images. Mastorides, chief of pathology and laboratory medicine services at the Tampa VA, said it took only minutes to teach the computer what cancerous tissue looks like.

The two VA doctors recently published a study comparing how different AI programs performed when training computers to diagnose cancer.

"Our earliest studies showed accuracies over 95 percent," Mastorides said.

Enhance, not replace

The doctors said the technology could be especially useful in rural veterans clinics, where pathologists and other specialists aren't easily accessible, or in crowded VA emergency rooms, where being able to spot something like a brain hemorrhage faster could save more lives.

Borkowski, the chief of the hospital's molecular diagnostics section, said he sees AI as a tool to help doctors work more efficiently, not to put them out of a job.

"It won't replace the doctors, but the doctors who use AI will replace the doctors that don't," he said.

The Tampa pathologists aren't the first to experiment with machine learning in this way. The U.S. Food and Drug Administration has approved about 40 algorithms for medicine, including apps that predict blood sugar changes and help detect strokes in CT scans.

The VA already uses AI in several ways, such as scanning medical records for signs of suicide risks. Now the agency is looking to expand research into the technology.

The department announced the hiring of Gil Alterovitz as its first-ever Artificial Intelligence Director in July 2019 and launched the National Artificial Intelligence Institute in November. Alterovitz is a Harvard Medical School professor who co-wrote an artificial intelligence plan for the White House last year.

He said the VA has a "unique opportunity to help veterans" with artificial intelligence.

As the largest integrated health care system in the country, the VA has vast amounts of patient data, which is helpful when training AI software to recognize patterns and trends. Alterovitz said the health system generates about a billion medical images a year.

He described a potential future where AI could help combine the efforts of various specialists to improve diagnoses.

"So you might have one site where a pathologist is looking at slides, and then a radiologist is analyzing MRI and other scans that look at a different level of the body," he said. "You could have an AI orchestrator putting together different pieces and making potential recommendations that teams of doctors can look at."

Alterovitz is also looking for other ways AI could help VA staff members make better use of their time and help patients in areas where resources are limited.

"Being able to cut the (clinician) workload down is one way to do that," he said. "Other ways are working on processes, so reducing patient wait times, analyzing paperwork, etc."

Barriers to AI

But Alterovitz notes there are challenges to implementing AI, including privacy concerns and trying to understand how and why AI systems make decisions.

In 2019, DeepMind Technologies, an AI firm owned by Google, used VA data to test a system to predict deadly kidney disease. But for every correct prediction, there were two false positives.

Those false results may cause doctors to recommend inappropriate treatments, run unnecessary tests, or do other things that could harm patients, waste time, and reduce confidence in the technology.

"It's important for AI systems to be tested in real-world environments with real-world patients and clinicians because there can be unintended consequences," said Mildred Cho, associate director of the Stanford Center for Biomedical Ethics.

Cho also said it's important to test AI systems with a variety of demographics because what may work for one population may not for another. The DeepMind study acknowledged that more than 90 percent of the patients in the dataset that it used to test the system were male veterans, and that performance was lower for females.

Alterovitz said the VA is taking those concerns into account as the agency experiments with AI and tries to improve upon the technology to ensure it is reliable and effective.

This story was produced by the American Homefront Project, a public media collaboration that reports on American military life and veterans. Funding comes from the Corporation for Public Broadcasting.

Copyright 2020 North Carolina Public Radio – WUNC. To see more, visit North Carolina Public Radio – WUNC.
